Dynamically Relighting Digital Content from Real World Data

May 16, 2017

Joe Abreu is a Software Developer at Blippar, responsible for improving the animation and rendering for the app and web platforms. He has a degree in Computer Visualisation and Animation and has worked in the Augmented Reality space for over 5 years.

MOTIVATION

One of the difficult problems for Augmented Reality is blending virtual content into the real world seamlessly. AR content has a tendency to stand out from whatever environment it is placed into, which breaks the user's belief that the content is really in front of them.

There are various ways to help digital content to blend well and these include:

- Tightly controlled lighting environments

- Soft lighting and shadows

- Introducing noise into the virtual content to mimic camera artifacts.

These can work wonders in situations where the developer knows beforehand the exact lighting conditions and environment that the AR content will be viewed in.

However, the reality is that AR content is often viewed outside of where the developers intended it to be used. Is the user at home? On the street? In a shop or a forest? Is it daytime or dusk?

If the AR content always looked the same in each of these situations it would be easy to distinguish the AR content from the real world.

The following technique is designed to overcome such limitations by relighting digital content based on the lighting environment that it is placed in.

EXPLANATION

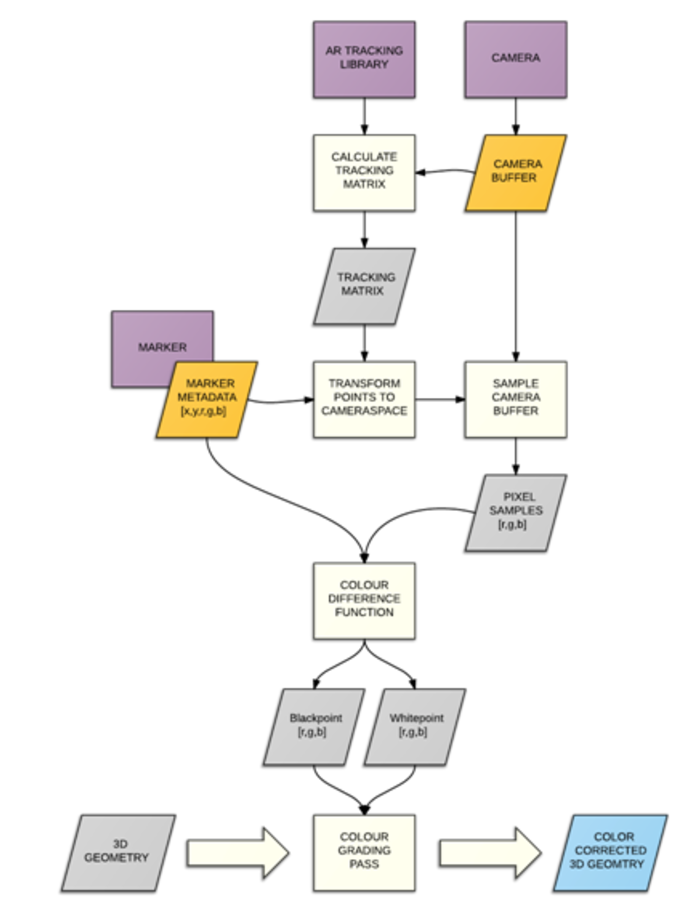

The overview of the technique is as follows:

- For every trackable marker store additional metadata. This metadata will include pixel samples and 2D positions in the image.

- For every frame read the pixel values from the camera at each sample point.

- Calculate the colour differences between the camera pixels and metadata pixels.

- Weight the colour differences and sum together to determine blackpoint and whitepoint colours.

- Colour correct the digital content by adding the blackpoint colour and multiplying by the whitepoint colour.

High level flow chart explaining the relighting process.

The process is similar to how digital cameras perform automatic white balancing, but reversed. Automatic white balancing normalizes the colours from the camera feed to neutralize any colour temperature it picks up, whereas our technique determines the colour temperature and luminance seen by the camera and adjusts the virtual content to match.

To identify the camera's colour temperature we need some information about the world. Thankfully, the trackable marker can be used for this purpose. Using specific pixels from the marker we can find out how our camera views the world.

This metadata can be used to work out the lighting conditions that our camera sees. Selecting about 10 pixels randomly distributed across the marker will work fine, but more can help stabilize the results. There should be a good range of sample colours: some light, some dark and some in between. It is also a good idea to take samples from large areas of the same colour. This way, when the tracking library isn't 100% spot on, the projected sample point should still pick up a similar colour.

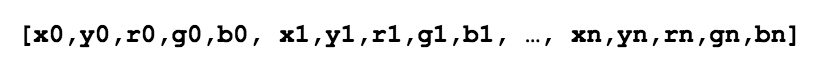

We should now have a collection of samples, each holding a 2D position on the marker and the pixel colour of the marker at that position.
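As an illustration, one plausible in-memory layout for these samples is sketched below. The names `MarkerSample`, `position` and `colour`, the normalised coordinates and the example values are all assumptions made for this sketch, not Blippar's actual metadata format.

```python
from dataclasses import dataclass
from typing import Tuple

Colour = Tuple[float, float, float]  # assumed: RGB, each channel in [0, 1]


@dataclass(frozen=True)
class MarkerSample:
    """One metadata entry for a trackable marker (hypothetical layout)."""
    position: Tuple[float, float]  # assumed: 2D marker position, normalised to [0, 1]
    colour: Colour                 # reference colour of the marker at that position


# Made-up example values covering light, mid-tone and dark areas.
samples = [
    MarkerSample(position=(0.12, 0.80), colour=(0.95, 0.93, 0.90)),  # light
    MarkerSample(position=(0.55, 0.40), colour=(0.50, 0.45, 0.40)),  # mid-tone
    MarkerSample(position=(0.85, 0.15), colour=(0.05, 0.05, 0.08)),  # dark
]
```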

To be able to sample pixels from the camera buffer we need to transform our metadata points from marker space to camera space. This is achieved by using a transformation matrix from our AR tracking library. Multiplying each point by this matrix projects the sample to the correct pixel location in the camera buffer.
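A minimal sketch of that projection, assuming the tracking library exposes a 3x3 homography that maps marker coordinates directly to camera pixels. Many libraries instead supply a 4x4 model-view-projection matrix, in which case the multiplication changes but the perspective divide remains. The function names `marker_to_camera` and `sample_camera` are hypothetical.

```python
from typing import Optional, Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]  # assumed: row-major 3x3 homography
Colour = Tuple[float, float, float]


def marker_to_camera(h: Matrix3, point: Tuple[float, float]) -> Tuple[int, int]:
    """Project a 2D marker-space point to an integer pixel in the camera buffer."""
    x, y = point
    # Multiply the homogeneous point (x, y, 1) by the matrix.
    px = h[0][0] * x + h[0][1] * y + h[0][2]
    py = h[1][0] * x + h[1][1] * y + h[1][2]
    pw = h[2][0] * x + h[2][1] * y + h[2][2]
    if abs(pw) < 1e-9:
        raise ValueError("point projects to infinity")
    # Perspective divide, then round to the nearest pixel.
    return round(px / pw), round(py / pw)


def sample_camera(buffer: Sequence[Sequence[Colour]], width: int, height: int,
                  pixel: Tuple[int, int]) -> Optional[Colour]:
    """Return the camera colour at `pixel`, or None if it lies outside the frame."""
    u, v = pixel
    if 0 <= u < width and 0 <= v < height:
        return buffer[v][u]  # buffer is indexed as buffer[row][column]
    return None
```

Samples that project outside the frame return `None` here so that the caller can leave them out of the colour difference calculation for that frame.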

Both the metadata and the camera samples are passed on to a colour difference function. This function calculates the relative differences in colour values between our metadata and our camera samples. These differences are accumulated together (with some additional weighting values factored in) to produce two output colours: the whitepoint and the blackpoint. These two colours contain all the information about how the camera sees the world. The whitepoint determines the camera's brightness and colour temperature. The blackpoint determines the camera's ambient lighting.

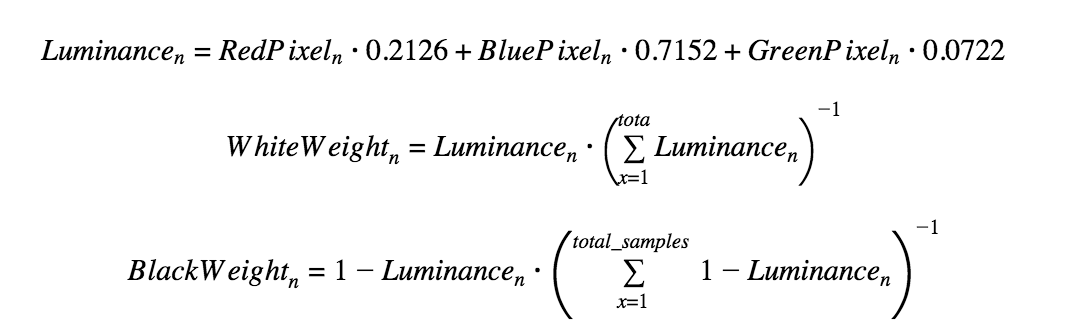

It is important that the whitepoint and blackpoint are calculated accurately, so the colour differences are weighted by a factor as they are accumulated. This factor depends on how light or dark our original marker samples were. Lighter colours will contribute more to the whitepoint and darker ones to the blackpoint. The formula we use to calculate the weighting factors is as follows:

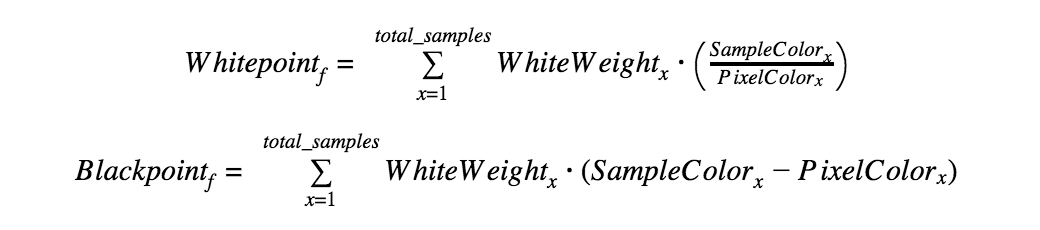

With these weighting factors we can calculate the two output colours with the following equation:
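The exact weights and equation are not reproduced in the text, so the following is only a sketch under stated assumptions, not Blippar's formula. It assumes the camera sees roughly `marker * whitepoint + blackpoint`, weights each sample's contribution to the whitepoint by its Rec. 709 luminance, and weights its contribution to the blackpoint by one minus that luminance. The function name `estimate_points` and the two-pass structure are hypothetical.

```python
from typing import Sequence, Tuple

Colour = Tuple[float, float, float]


def luminance(c: Colour) -> float:
    """Rec. 709 luminance of an RGB colour with channels in [0, 1]."""
    return 0.2126 * c[0] + 0.7152 * c[1] + 0.0722 * c[2]


def estimate_points(marker: Sequence[Colour], camera: Sequence[Colour],
                    eps: float = 1e-3) -> Tuple[Colour, Colour]:
    """Return (whitepoint, blackpoint) from paired marker and camera samples."""
    # Pass 1: whitepoint as a weighted mean of camera/marker ratios.
    white_sum = [0.0, 0.0, 0.0]
    white_w = [0.0, 0.0, 0.0]
    for m, c in zip(marker, camera):
        w_light = luminance(m)  # assumed weight: lighter samples count more
        for ch in range(3):
            if m[ch] > eps:     # skip channels too dark to give a stable ratio
                white_sum[ch] += w_light * (c[ch] / m[ch])
                white_w[ch] += w_light
    white = tuple(white_sum[ch] / white_w[ch] if white_w[ch] > 0 else 1.0
                  for ch in range(3))

    # Pass 2: blackpoint as a weighted mean of what the whitepoint leaves unexplained.
    black_sum = [0.0, 0.0, 0.0]
    black_w = 0.0
    for m, c in zip(marker, camera):
        w_dark = 1.0 - luminance(m)  # assumed weight: darker samples count more
        black_w += w_dark
        for ch in range(3):
            black_sum[ch] += w_dark * (c[ch] - m[ch] * white[ch])
    black = tuple(black_sum[ch] / black_w if black_w > 0 else 0.0
                  for ch in range(3))
    return white, black
```

If the camera samples match the marker samples exactly, this returns a whitepoint of (1, 1, 1) and a blackpoint of (0, 0, 0), which leaves the content unchanged.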

The last step is to take the two colours and adjust all of our digital content. This is a pretty simple step that modifies all pixels from the digital content using the following formula:
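As a sketch, the adjustment can be written as a per-channel multiply and add. The text does not pin down the order of the two operations; this example assumes the content colour is multiplied by the whitepoint first and the blackpoint is added afterwards, then clamped to the displayable range. In a real renderer this would typically live in a fragment shader; Python is used here only for illustration, and `relight` is a hypothetical name.

```python
from typing import Tuple

Colour = Tuple[float, float, float]


def relight(colour: Colour, whitepoint: Colour, blackpoint: Colour) -> Colour:
    """Apply colour * whitepoint + blackpoint per channel, clamped to [0, 1]."""
    return tuple(
        min(1.0, max(0.0, c * w + b))
        for c, w, b in zip(colour, whitepoint, blackpoint)
    )


# A mid-grey pixel under a warm, slightly dim light with a little ambient lift.
adjusted = relight((0.5, 0.5, 0.5), (1.0, 0.5, 0.25), (0.125, 0.125, 0.125))
```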

This will adjust all content to match the camera lighting. This procedure is repeated for every frame in which our marker is tracked, so changes in the lighting over time also change our content.

One last step that we use here at Blippar is a smoothing filter applied to the whitepoint and blackpoint over time. This makes sure that there are no harsh flickers or transitions when we relight our content, and it more closely matches how cameras adjust to quick changes in light.
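The text does not say which filter is used. One simple option, shown here purely as an assumption, is an exponential moving average that moves the current value a fixed fraction of the way towards each new estimate. The class name `SmoothedColour` and the default `alpha` are hypothetical.

```python
from typing import Tuple

Colour = Tuple[float, float, float]


class SmoothedColour:
    """Exponential moving average of an RGB colour across frames."""

    def __init__(self, initial: Colour, alpha: float = 0.1) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.value = tuple(initial)
        self.alpha = alpha  # smaller alpha gives slower, smoother transitions

    def update(self, target: Colour) -> Colour:
        """Move the stored colour towards `target` and return the new value."""
        a = self.alpha
        self.value = tuple(v + a * (t - v) for v, t in zip(self.value, target))
        return self.value


# One instance each for the whitepoint and the blackpoint, updated once per frame.
whitepoint = SmoothedColour((1.0, 1.0, 1.0), alpha=0.5)
whitepoint.update((0.5, 0.5, 0.5))
```

A smaller `alpha` suppresses flicker more strongly but makes the content slower to react to a genuine lighting change, so the value is a trade-off to tune per application.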

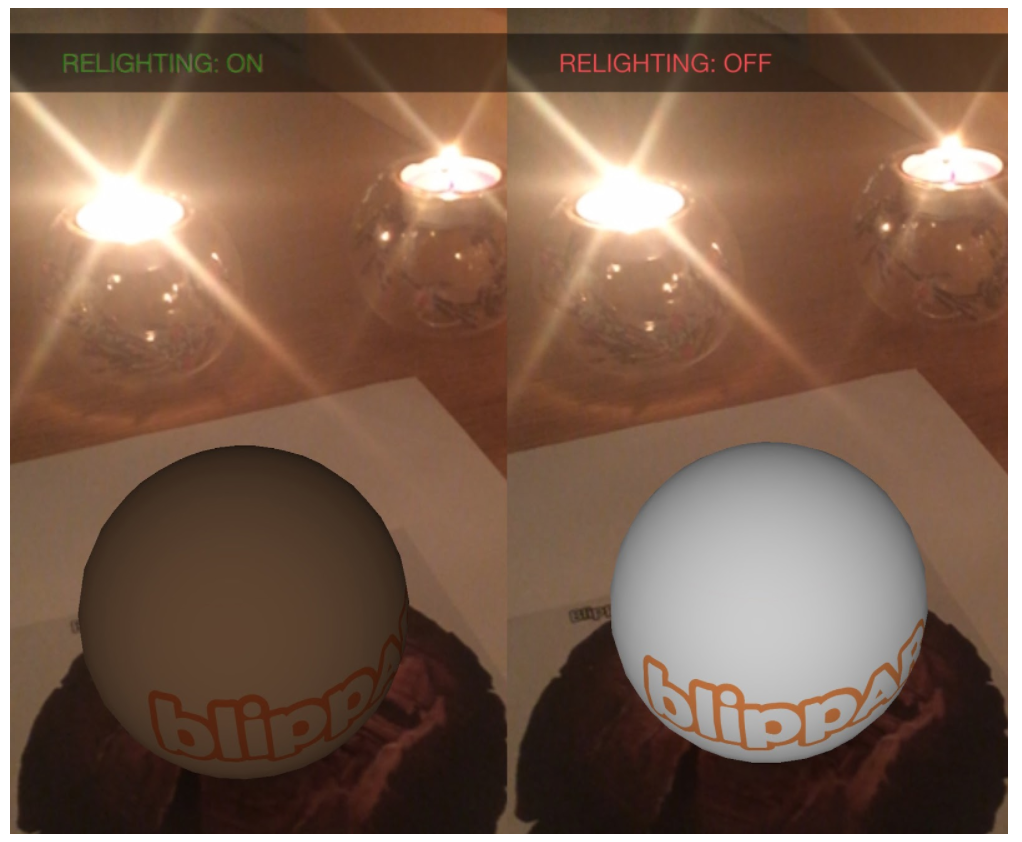

Image showing content with and without the relighting technique applied.

LIMITATIONS

As with any idealised system, nothing ever works perfectly, and there are some drawbacks when using this technique:

Marker tracking lost – when there is no longer a marker to track, all calculations stop. Depending on the use case, content may revert to its unlit state, stay at the last known adjustment state, or use some blend of the two.

Obscured Marker – obscuring the marker with other objects/hands etc. will affect the relighting calculation because the camera samples will not sample from the marker but from objects in front of it. This will cause anomalous lighting artifacts on the AR content.

Marker Quality – the quality of the marker itself can have a detrimental effect. Markers that contain lots of high contrast in small areas of the marker, or markers that are overly simple with too few colours (think Pepsi logo) can impact the quality of the relighting calculations.

Accurate Colour Reproduction – as we need to sample pixels as reference points for our calculations, if those samples do not accurately reflect the exact colours found on the marker, perhaps through RGB to CMYK conversion issues or how well a printer can replicate colours, then this can impact the relighting calculations.

Markers from digital screens – markers on digital screens are not at all good for relighting purposes because the marker is made up of emitted light from a display. This generally means that the marker's surface does not gain enough light from external sources to actually contribute to the relighting calculations.

Predefined tracked marker only – this technique only works on objects that are known beforehand such as images where the colours can be stored in some form of metadata. This technique could not be used with AR tracking technologies such as SLAM where expected colour values are not known beforehand.
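For the tracking-lost case described above, the three options (revert, hold, blend) could be sketched as follows. This assumes the unlit state corresponds to a whitepoint of (1, 1, 1) and a blackpoint of (0, 0, 0). The function name `fallback`, the mode names and the `fade_seconds` parameter are hypothetical.

```python
from typing import Tuple

Colour = Tuple[float, float, float]

NEUTRAL_WHITE: Colour = (1.0, 1.0, 1.0)  # assumed unlit whitepoint
NEUTRAL_BLACK: Colour = (0.0, 0.0, 0.0)  # assumed unlit blackpoint


def lerp(a: Colour, b: Colour, t: float) -> Colour:
    """Linearly interpolate from colour a to colour b."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))


def fallback(last_white: Colour, last_black: Colour, seconds_lost: float,
             mode: str = "blend", fade_seconds: float = 2.0) -> Tuple[Colour, Colour]:
    """Choose the whitepoint and blackpoint to use while the marker is not tracked."""
    if mode == "hold":    # stay at the last known adjustment
        return last_white, last_black
    if mode == "revert":  # snap back to the unlit state
        return NEUTRAL_WHITE, NEUTRAL_BLACK
    if mode == "blend":   # fade from the last adjustment to the unlit state
        t = min(1.0, max(0.0, seconds_lost / fade_seconds))
        return lerp(last_white, NEUTRAL_WHITE, t), lerp(last_black, NEUTRAL_BLACK, t)
    raise ValueError(f"unknown mode: {mode}")
```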

FUTURE DEVELOPMENT

There are various improvements that could be made to this technique; some would have more benefits than others. At the moment we are manually generating the metadata from our marker but this could easily be automated. A service could analyse any input image and pick some good pixel samples. This data could be bundled together with every marker we have stored on our servers, resulting in a feature that could work with every marker we have in our database.
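A rough sketch of what such an automated picker might look like, following the sampling guidelines given earlier: prefer areas of uniform colour, and cover a range from dark to light. This is a speculative illustration rather than an existing Blippar service. The function name `pick_samples`, the patch-based uniformity test and the thresholds are all assumptions.

```python
from typing import List, Sequence, Tuple

Colour = Tuple[float, float, float]
Image = Sequence[Sequence[Colour]]  # assumed: image[y][x], RGB channels in [0, 1]


def luminance(c: Colour) -> float:
    """Rec. 709 luminance of an RGB colour."""
    return 0.2126 * c[0] + 0.7152 * c[1] + 0.0722 * c[2]


def pick_samples(image: Image, count: int = 10, radius: int = 2,
                 max_spread: float = 0.05) -> List[Tuple[Tuple[int, int], Colour]]:
    """Pick up to `count` (position, colour) samples from uniform patches."""
    if count < 2:
        raise ValueError("count must be at least 2")
    height, width = len(image), len(image[0])
    step = 2 * radius + 1  # non-overlapping square patches
    candidates = []
    for cy in range(radius, height - radius, step):
        for cx in range(radius, width - radius, step):
            patch = [image[y][x]
                     for y in range(cy - radius, cy + radius + 1)
                     for x in range(cx - radius, cx + radius + 1)]
            mean = tuple(sum(p[ch] for p in patch) / len(patch) for ch in range(3))
            # Largest deviation of any channel from the patch mean.
            spread = max(abs(p[ch] - mean[ch]) for p in patch for ch in range(3))
            if spread <= max_spread:  # keep only near-uniform patches
                candidates.append(((cx, cy), mean))
    # Sort by luminance and take evenly spaced entries so that dark,
    # mid-tone and light areas are all represented.
    candidates.sort(key=lambda s: luminance(s[1]))
    if len(candidates) <= count:
        return candidates
    stride = (len(candidates) - 1) / (count - 1)
    return [candidates[round(i * stride)] for i in range(count)]
```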

Another possible development could be improving how we apply the lighting adjustments to our AR content. We could do reverse light source determination, whereby we infer the direction of a light source based on specular highlights found on a marker. This could then be used to light all content with a virtual directional light instead of applying the same adjustment uniformly to all rendered objects.

If you are a developer looking to create dynamic AR, sign up to our Blippbuilder Script and get started with it today!